Updated at 8:35 p.m. ET

By the time a pro-Trump mob stormed the U.S. Capitol on Jan. 6, fueled by far-right conspiracies and lies about a stolen election, a group of researchers at New York University had been compiling Facebook engagement data for months.

The NYU-based group, Cybersecurity For Democracy, was studying online misinformation — wanting to know how different types of news sources engaged with their audiences on Facebook. After the events of Jan. 6, researcher Laura Edelson expected to see a spike in Facebook users engaging with the day's news, similar to Election Day.

But Edelson, who helped lead the research, said her team noticed a troubling phenomenon.

"The thing was, most of that spike was concentrated among the partisan extremes and misinformation providers," Edelson told NPR's All Things Considered. "And when I really sit back and think about that, I think the idea that on a day like that, which was so scary and so uncertain, that the most extreme and least reputable sources were the ones Facebook users were engaging with, is pretty troubling."

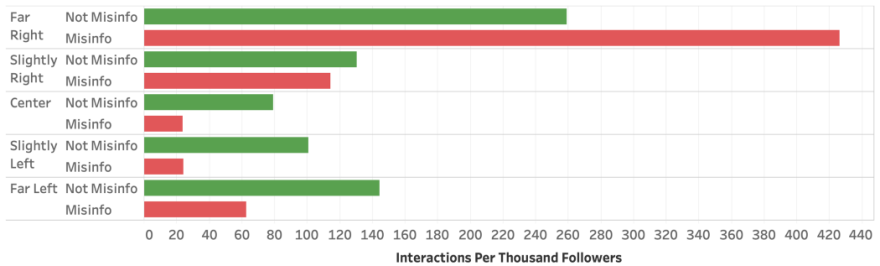

But it wasn't just one day of high engagement. A new study from Cybersecurity For Democracy found that far-right accounts known for spreading misinformation are not only thriving on Facebook, they're actually more successful than other kinds of accounts at getting likes, shares and other forms of user engagement.

It wasn't a small edge, either.

"It's almost twice as much engagement per follower among the sources that have a reputation for spreading misinformation," Edelson said. "So, clearly, that portion of the news ecosystem is behaving very differently."

The research team used CrowdTangle, a Facebook-owned tool that measures engagement, to analyze more than 8 million posts from almost 3,000 news and information sources over a five-month period. Those sources were placed in one of five categories for partisanship — Far Right, Slightly Right, Center, Slightly Left, Far Left — using evaluations from Media Bias/Fact Check and NewsGuard.

Each source was then evaluated on whether it had a history of spreading misinformation or conspiracy theories. What Edelson and her colleagues discovered is what some Facebook critics — and at least one anonymous executive — have been saying for some time: that far-right content is just more engaging. In fact, the study found that among far-right sources, those known for spreading misinformation significantly outperformed non-misinformation sources.

In all other partisan categories, though, "the sources that have a reputation for spreading misinformation just don't engage as well," Edelson said. "There could be a variety of reasons for that, but certainly the simplest explanation would be that users don't find them as credible and don't want to engage with them."

The researchers called this phenomenon the "misinformation penalty."
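The per-follower comparison can be illustrated with a minimal sketch. It assumes each source has a partisanship label, a misinformation flag, a follower count and a count of interactions. The records and the function name `engagement_per_follower` are hypothetical and the numbers are invented for illustration. The study's own aggregation may differ.

```python
from collections import defaultdict

# Hypothetical records, NOT data from the study:
# (partisanship, has_misinformation_reputation, followers, interactions)
SOURCES = [
    ("Far Right", True, 1000, 400),
    ("Far Right", False, 2000, 400),
    ("Center", True, 1000, 50),
    ("Center", False, 4000, 400),
]


def engagement_per_follower(sources):
    """Return total interactions divided by total followers for each group."""
    totals = defaultdict(lambda: [0, 0])  # group -> [interactions, followers]
    for partisanship, misinformation, followers, interactions in sources:
        group = totals[(partisanship, misinformation)]
        group[0] += interactions
        group[1] += followers
    # Skip groups with no followers to avoid dividing by zero.
    return {key: i / f for key, (i, f) in totals.items() if f > 0}


rates = engagement_per_follower(SOURCES)
for (partisanship, misinformation), rate in sorted(rates.items()):
    label = "misinformation" if misinformation else "non-misinformation"
    print(f"{partisanship:10s} {label:20s} {rate:.2f}")
```

In this invented data, the far-right misinformation group has a higher rate than its non-misinformation counterpart, while the center misinformation group has a lower one, which is the pattern the researchers describe as a penalty.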

Facebook has repeatedly promised to address the spread of conspiracies and misinformation on its site. Joe Osborne, a Facebook spokesperson, told NPR in a statement that engagement is not the same as how many people actually see a piece of content. "When you look at the content that gets the most reach across Facebook, it's not at all as partisan as this study suggests," he said.

In response, Edelson called on Facebook to be transparent about how it tracks impressions and promotes content: "They can't say their data leads to a different conclusion but then not make that data public."

"I think what's very clear is that Facebook has a misinformation problem," she said. "I think any system that attempts to promote the most engaging content, from what we can tell, will wind up promoting misinformation."

Editor's note: Facebook is among NPR's financial supporters.

Copyright 2021 NPR. To see more, visit https://www.npr.org.